Data Architecture Strategy

Document type: Micro-report

Author: Alistair Goodall BSc(Eng)Hons ACA

Publication Date: 14/03/2017

-

Abstract

Data architecture can be defined as the set of rules, policies, standards and models that govern and define the type of data collected and how it is used, stored, managed and integrated within an organization and its database systems.

This paper addresses the challenges of applying a data architecture strategy to a major project organisation lifecycle. It would be of relevance to any IT professionals or system administrators working on major projects.

-

Introduction

Crossrail project data has existed from the 1990s when the route was first safeguarded, through to 2005 when the Crossrail Hybrid Bill was presented, 2008 when the Crossrail Act was passed and will continue to December 2018 as the project hands over to the operational Elizabeth line.

The requirements of each phase of the project have been different in terms of processes, scale and data. Many of the data processes and concepts used have evolved over the life of the project as lessons were learned. The scale of the Crossrail supply chain has also been used to help drive best practice throughout the industry.

Crossrail has collected data to enable the handover of an assured railway (Physical and Digital) and this has been enabled through a series of contractual deliverables and associated management processes.

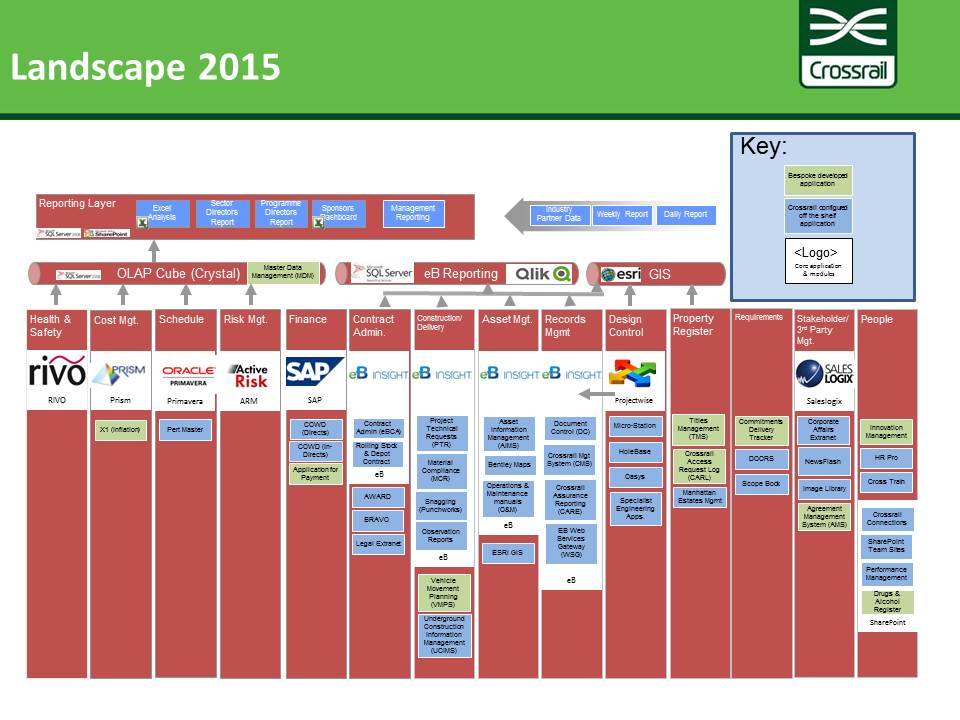

The Crossrail Data Architecture was developed to support this level of change, support the business and not act as a brake on progress. To achieve this, Crossrail data architecture was aligned to and evolved with the core teams of the Crossrail organisational model which allowed individual teams to adopt innovative and highly effective data solutions for their business processes. Figure 1 below shows the various applications employed:

Figure 1– Crossrail data architecture

Data Models

Crossrail does not have a centrally enforced data architecture but instead relies on a number of discrete applications which have a more consistent data model where they are managed through the same core team.

An example of a Directorate with a more consistent data model is Programme Controls, where a common Work Breakdown Structure (WBS) is employed within the Programme Planning (Primavera) and Cost Management (Prism) applications. Risk (ARM) and Health and Safety (RIVO) are then reported by contract and/or location, which allows for consolidation with the cost and schedule data through a custom Online Analytical Processing (OLAP) data warehouse. The learning legacy master data management paper details this approach.
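The consolidation described above can be illustrated with a minimal sketch. It assumes each source application exports records carrying a contract code; the record layouts, contract codes and figures are hypothetical and are not taken from any Crossrail system or from the actual warehouse design.

```python
from collections import defaultdict

# Hypothetical extracts from each source application, keyed by contract code.
cost_rows = [
    {"contract": "C100", "wbs": "1.1", "cost": 120.0},
    {"contract": "C100", "wbs": "1.2", "cost": 80.0},
    {"contract": "C200", "wbs": "2.1", "cost": 50.0},
]
risk_rows = [
    {"contract": "C100", "exposure": 15.0},
    {"contract": "C200", "exposure": 5.0},
    {"contract": "C200", "exposure": 2.5},
]
incident_rows = [
    {"contract": "C200", "incidents": 1},
]


def consolidate(cost_rows, risk_rows, incident_rows):
    """Sum each measure per contract so they can be reported side by side."""
    summary = defaultdict(lambda: {"cost": 0.0, "exposure": 0.0, "incidents": 0})
    for row in cost_rows:
        summary[row["contract"]]["cost"] += row["cost"]
    for row in risk_rows:
        summary[row["contract"]]["exposure"] += row["exposure"]
    for row in incident_rows:
        summary[row["contract"]]["incidents"] += row["incidents"]
    return dict(summary)


report = consolidate(cost_rows, risk_rows, incident_rows)
for contract in sorted(report):
    print(contract, report[contract])
```

The point of the sketch is only that a shared key (here the contract code) is what makes measures from separate applications comparable; a real warehouse would add further dimensions such as WBS element, location and reporting period.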

Initially the data model for the enterprise data warehouse was specified to enable board reporting across a number of processes, including Finance data (SAP) and contract management data (AssetWise). However, with contract as the only common data element there was no scope for more detailed analysis, and it proved simpler to consolidate manually at contract level, so the feeds were subsequently dropped.

The lack of a common data model between the Programme Controls applications and the core contract management application led to manual reconciliations between the compensation event cycle in contract administration and the cost/schedule management process in programme controls.

Within the data model, contract codes were both ubiquitous and consistent across most applications. Contract based reporting could be rolled up into the prevailing organisational structure during the delivery phase, but was less useful in the handover phase, when reporting needed to move from a geographic breakdown (East, Central and West) to a facility breakdown.
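As a rough illustration of rolling contract-level figures up into alternative structures, the sketch below groups the same hypothetical contract totals first by area and then by facility. All codes, groupings and values are invented for the example, and it assumes each contract maps to exactly one group, which is a simplification.

```python
# Hypothetical contract-level totals (illustrative values only).
contract_cost = {"C100": 200.0, "C200": 50.0, "C300": 75.0, "C400": 10.0}

# Two alternative groupings of the same contracts. C400 is deliberately
# missing from the area mapping to show how gaps surface.
by_area = {"C100": "West", "C200": "Central", "C300": "Central"}
by_facility = {
    "C100": "Facility 1",
    "C200": "Facility 2",
    "C300": "Facility 2",
    "C400": "Facility 3",
}


def roll_up(values, hierarchy):
    """Sum contract values into the groups defined by a contract -> group mapping."""
    totals = {}
    for contract, value in values.items():
        group = hierarchy.get(contract, "UNMAPPED")
        totals[group] = totals.get(group, 0.0) + value
    return totals


area_totals = roll_up(contract_cost, by_area)
facility_totals = roll_up(contract_cost, by_facility)
print(area_totals)
print(facility_totals)
```

Where a single contract spans several facilities, a one-to-one mapping like this no longer holds and the data would need to be held at a finer grain than contract, which is consistent with the difficulty described above.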

Locations were less consistent, with different organisational naming conventions and an internal change to naming conventions between Design and Main Works, which was a lost opportunity to integrate data across systems. Geospatial information associated with locations and assets was not propagated to or from other systems but was instead managed centrally through a GIS database, which enriched data from other applications with GIS geometries.
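One way to picture central enrichment of this kind is sketched below: location names used by different teams are mapped to a single code, and the geometry held centrally is attached to each record. The alias table, location codes and coordinates are all hypothetical placeholders, and the approach is an assumption for illustration rather than a description of the Crossrail GIS implementation.

```python
# Hypothetical alias table: names used by different teams -> one location code.
LOCATION_ALIASES = {
    "site a shaft": "LOC-A",
    "shaft a": "LOC-A",
    "site b portal": "LOC-B",
}

# Hypothetical geometries held centrally (placeholder x, y values).
GIS_GEOMETRY = {
    "LOC-A": (0.0, 0.0),
    "LOC-B": (1.0, 2.0),
}


def canonical_location(name):
    """Return the shared location code for a free-text name, or None if unknown."""
    return LOCATION_ALIASES.get(name.strip().lower())


def enrich(records):
    """Attach a location code and geometry to each record without altering the source."""
    enriched = []
    for record in records:
        code = canonical_location(record["location"])
        enriched.append(
            {**record, "location_code": code, "geometry": GIS_GEOMETRY.get(code)}
        )
    return enriched


rows = enrich(
    [
        {"id": 1, "location": " Site A Shaft "},
        {"id": 2, "location": "Shaft A"},
        {"id": 3, "location": "Unlisted compound"},
    ]
)
for row in rows:
    print(row)
```

Records whose location cannot be matched keep a `None` code and geometry, so they can be listed and resolved rather than silently dropped.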

Rules, Policies and Standards

The vast majority of deliverables are provided by contractors as a contractual requirement and this allows for an enforceable set of rules, standards and policies contained in the Crossrail Management System. Central to the data architecture is the Quality Management Policy which states:

“Management system records – all certificates, reports, measurements, methodologies etc. shall be maintained in eB the Crossrail electronic document management system. All significant project documentation shall be held in eB. Records shall comply with requirements defined in document control procedures. Records shall be sufficiently detailed to provide assurance of compliance with all requirements”

The Crossrail electronic document management system (Bentley AssetWise) is not restricted to a document centric view and so is able to accommodate a significant set of business processes creating a single inter-related source of truth for Crossrail, contractors and third parties. This spans:

– Contract management (NEC3 and bespoke Rolling Stock & Depot)

– Crossrail management system

– Assurance Reporting

– Materials Compliance

– MEP Design Review

– Project Technical Requests

– Snagging

– Observation Reporting

– Central Communications

– Asset Inventory Information

– Contract deliverables

– Common site filing

– Drawing approval (interfaced from ProjectWise)

– Handover (Assets and documentation)

The AssetWise architecture allows system administrators to configure workflows and specify the types of data to be collected for each business process. End users are then able to input data and to relate/re-use objects across and within business processes which gives a high level of integration of data and leads to efficiencies across and within contracts.

Similar policies mandated contractor provided data for Settlement Monitoring, Health and Safety, Schedule, Vehicle Movement Planning, Property Access Requests and Agreements Management. Where policies were not enforced the quality of data and process was reduced, but when enforced they allowed for standard processes and reporting within each dataset.

Whilst processes around deliverables could be mandated for handover, the requirements originate from the recipient organisation, and the responsibility for the production of the data is with Crossrail. This is for the purposes of:

- Operations (e.g. Asset data, O&M manuals)

- Enduring management processes associated with the Crossrail legal entity

- Learning legacy (e.g. monitoring data for the London area)

- Statutory data retention

The specifications for this data are all external, and the work to identify the exact requirements is increasing significantly in the final 3 years as the major Civils work comes to an end.

In addition, the contract code and work breakdown structures which were at the core of the deliverables data were largely irrelevant to the handover data. This led to a significant retrospective exercise to identify requirements, review existing data to identify where it was relevant, and then group and move it as appropriate.

Data Usage, Storage and Integration

Unstructured Data

Unstructured data within Crossrail was held electronically in network shares, SharePoint team sites, emails and email archives. There was a preference from within IT for teams to use SharePoint rather than network shares, but this decision, along with those around access, retention and any folder/naming/versioning/meta-data conventions, was left with the individuals and organisations concerned.

The data strategy was clear in that unstructured data could not be a deliverable. All deliverables were processed through the Enterprise Document Management System (EDMS), but until the final years the strategy did not define what unstructured data was relevant to handover.

By leaving the ownership and management of unstructured data to Directorates the IT overhead to manage data was kept very low (e.g. less than 1 FTE for SharePoint support) and organisations were able to work unencumbered.

Structured Data

Structured data is held within applications and the data architecture strategy was devolved to the applications and business process owners in terms of the data collected and how it is used then stored.

Some of the most significant data stores were developed and managed outside of the core IT team (e.g. CAD, GIS and Ground Movement Monitoring), which allowed for innovation, cost savings and flexibility but militated against any centralised view of data structures.

Tier 1 contractors would often have their own processes, applications and structured data which would need to be passed through to the relevant Crossrail application/process. Where there were standards (e.g. CAD and AGS) or bespoke interfaces (e.g. EDMS to EDMS) this improved the overall efficiency of the end to end process.

Data Integration

Data was integrated via:

- Holding multiple complete business processes in a single application (AssetWise) and using SQL Server Reporting Services to view

- Replicating into the Programme Controls data warehouse (Cost, Schedule, Risk, H&S) and using Excel to view

- Replicating into the GIS Database and using the GIS (ESRI) to view

- Consolidation through manual reporting processes and using Word/Excel to do this

- Passing CAD files from ProjectWise through to AssetWise as rendered PDFs (viewable without specialist software)

The most significant challenges to integration occurred within applications, where users were able to take shortcuts (e.g. loading a PDF list for a register of documents rather than relating each item within the application), and across applications, where data exchanged was not subject to master data management (MDM).
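A minimal sketch of the kind of check that master data management implies for exchanged data is shown below: incoming records are accepted only if their contract code appears in an agreed master list. The master list, field name and sample records are hypothetical, and this is one possible approach rather than a process the paper describes.

```python
# Hypothetical master list of valid contract codes.
MASTER_CONTRACTS = {"C100", "C200", "C300"}


def split_by_master(records, master_codes, key="contract"):
    """Separate records whose code is in the master list from those that are not."""
    accepted, rejected = [], []
    for record in records:
        # Normalise spacing and case before comparing with the master list.
        code = str(record.get(key, "")).strip().upper()
        if code in master_codes:
            accepted.append({**record, key: code})
        else:
            rejected.append(record)
    return accepted, rejected


incoming = [
    {"contract": "c100 ", "value": 10},
    {"contract": "C999", "value": 20},
    {"value": 30},
]
accepted, rejected = split_by_master(incoming, MASTER_CONTRACTS)
print("accepted:", accepted)
print("rejected:", rejected)
```

Rejected records are returned unchanged so that they can be sent back to the supplying system for correction instead of being loaded with an unrecognised code.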

Lessons Learned and Recommendations for Future Projects

From the outset define a data retention strategy encompassing statutory requirements, deliverables and handover which includes:

- Appropriate storage environment

- Retention period

- Appropriate meta-data

- Metrics to manage the quality control of the data
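The four elements listed above could be recorded per dataset in a simple structure such as the sketch below, which also shows one possible quality metric (the share of records carrying all required meta-data). The dataset name, storage label, retention period and field names are hypothetical values chosen for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetentionRule:
    """One entry in a retention strategy (all example values are hypothetical)."""
    dataset: str
    storage: str              # appropriate storage environment
    retention_years: int      # retention period
    required_metadata: tuple  # meta-data fields every record must carry


def missing_metadata(rule, record):
    """List the required meta-data fields that are absent or empty in a record."""
    return [name for name in rule.required_metadata if not record.get(name)]


def completeness(rule, records):
    """Quality metric: fraction of records with all required meta-data present."""
    if not records:
        return 1.0
    complete = sum(1 for record in records if not missing_metadata(rule, record))
    return complete / len(records)


rule = RetentionRule(
    dataset="asset_data",
    storage="archive",
    retention_years=25,
    required_metadata=("asset_id", "owner"),
)
records = [
    {"asset_id": "A1", "owner": "Operations"},
    {"asset_id": "A2"},
]
score = completeness(rule, records)
print(rule.dataset, score)
```

Tracking a metric like this from the outset would give early warning of meta-data gaps well before handover, when they are more expensive to correct.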

Engage with the end owner/operator as early as possible to anticipate handover requirements and to help carry any data innovations through to operations.

Standardising contract related workflow processes into a single information environment was a major success, but would be improved by providing contractors with a mechanism to interface their own back-end processes.

Mandate data formats, specify exactly how applications are to be used and provide core data reporting from the outset of each business process. Anticipate future processes and look for commonality between them.

Do not underestimate the significant investment required in training end users, particularly in a project environment with a high staff turnover.

Recognise and respect the business as best placed to architect their specialist solutions but focus on the interface with other teams/applications to provide standards and governance.

-

Authors

Alistair Goodall BSc(Eng)Hons ACA - Crossrail Ltd

Alistair is an experienced Finance/IT professional with an excellent understanding of business process, management information needs, project management and systems implementation.